A Research through Design Journey

Prompting Realities is a design research project exploring how large language models (LLMs) can act as intermediaries between human imagination and physical artifacts. The project investigates prompting not just as a linguistic operation but as a new interaction modality—where language becomes a material practice capable of shaping behavior, movement, and experience in the physical world. It aims to establish a reproducible pipeline for connecting AI-driven interpretation with tangible expression, enabling designers and researchers to prototype hybrid systems where computational intelligence and material agency co-evolve through dialogue.

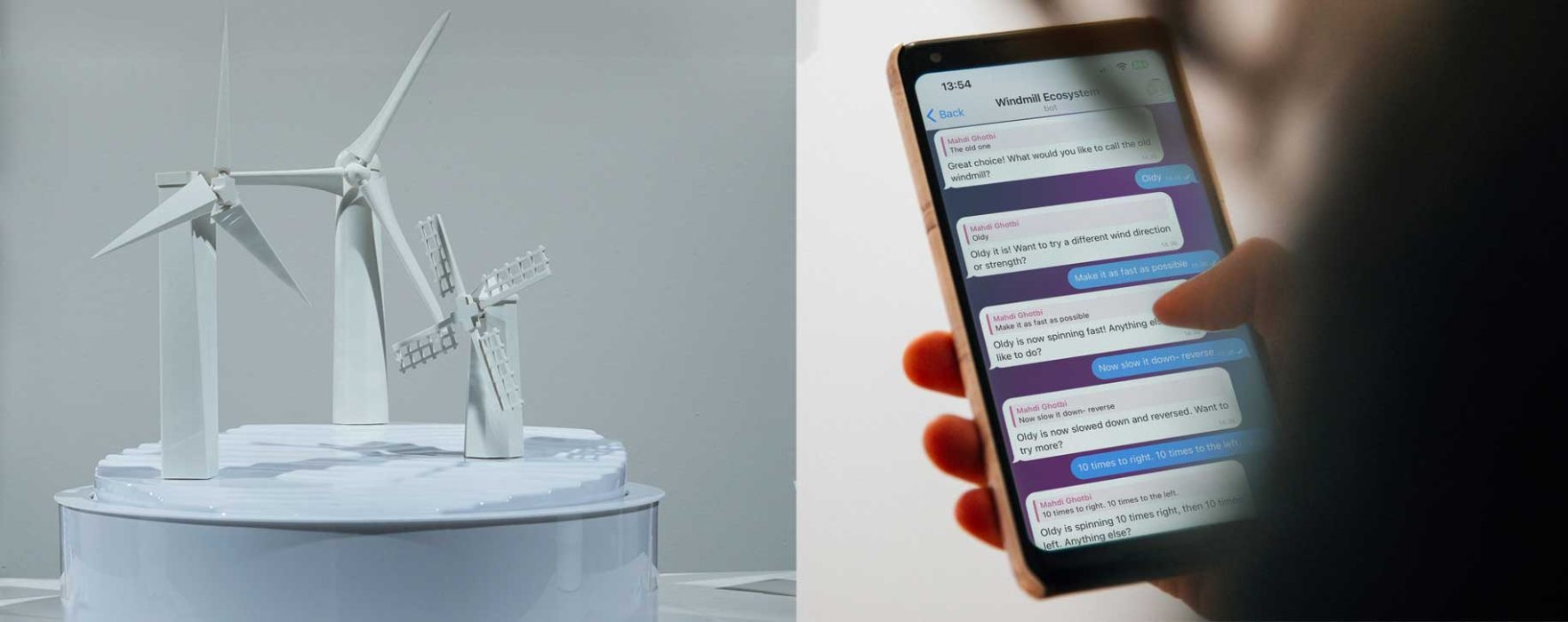

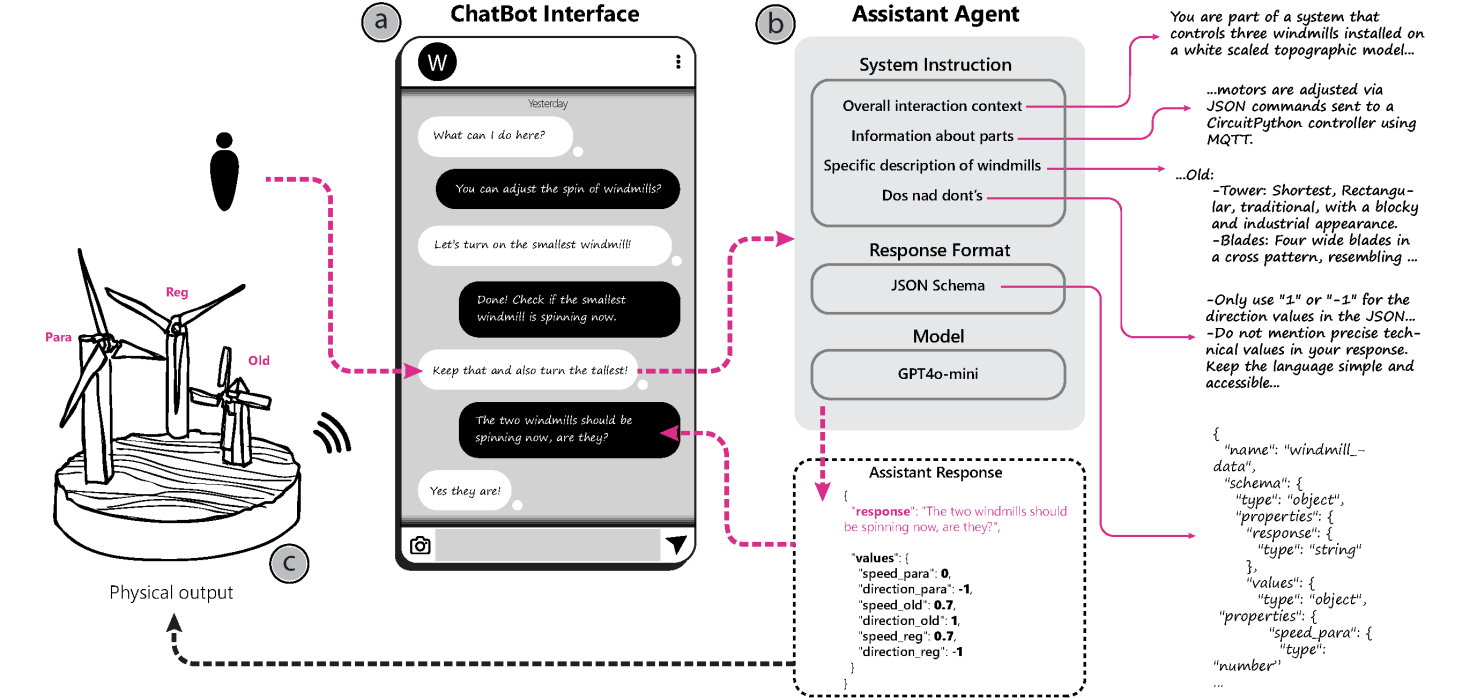

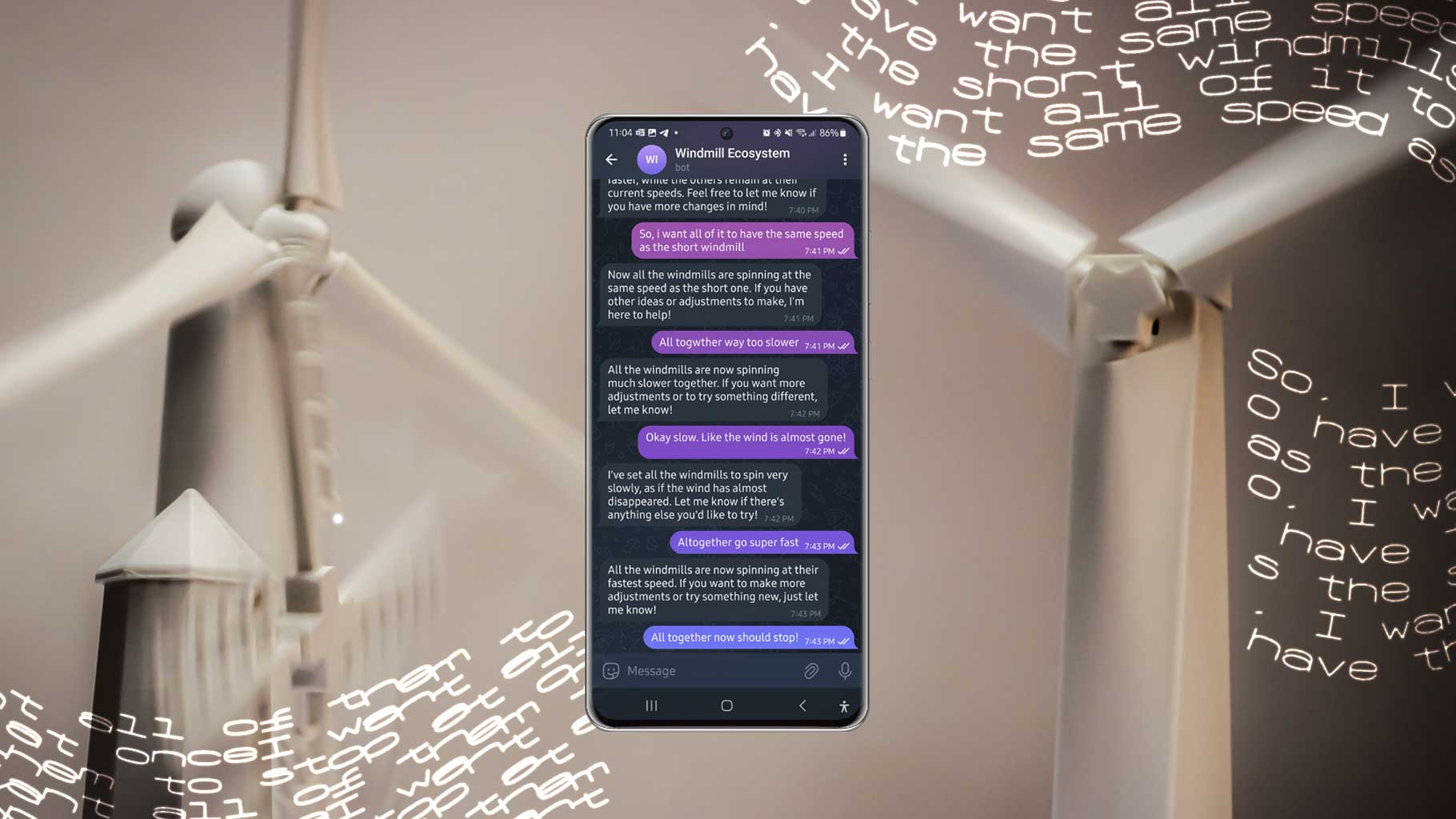

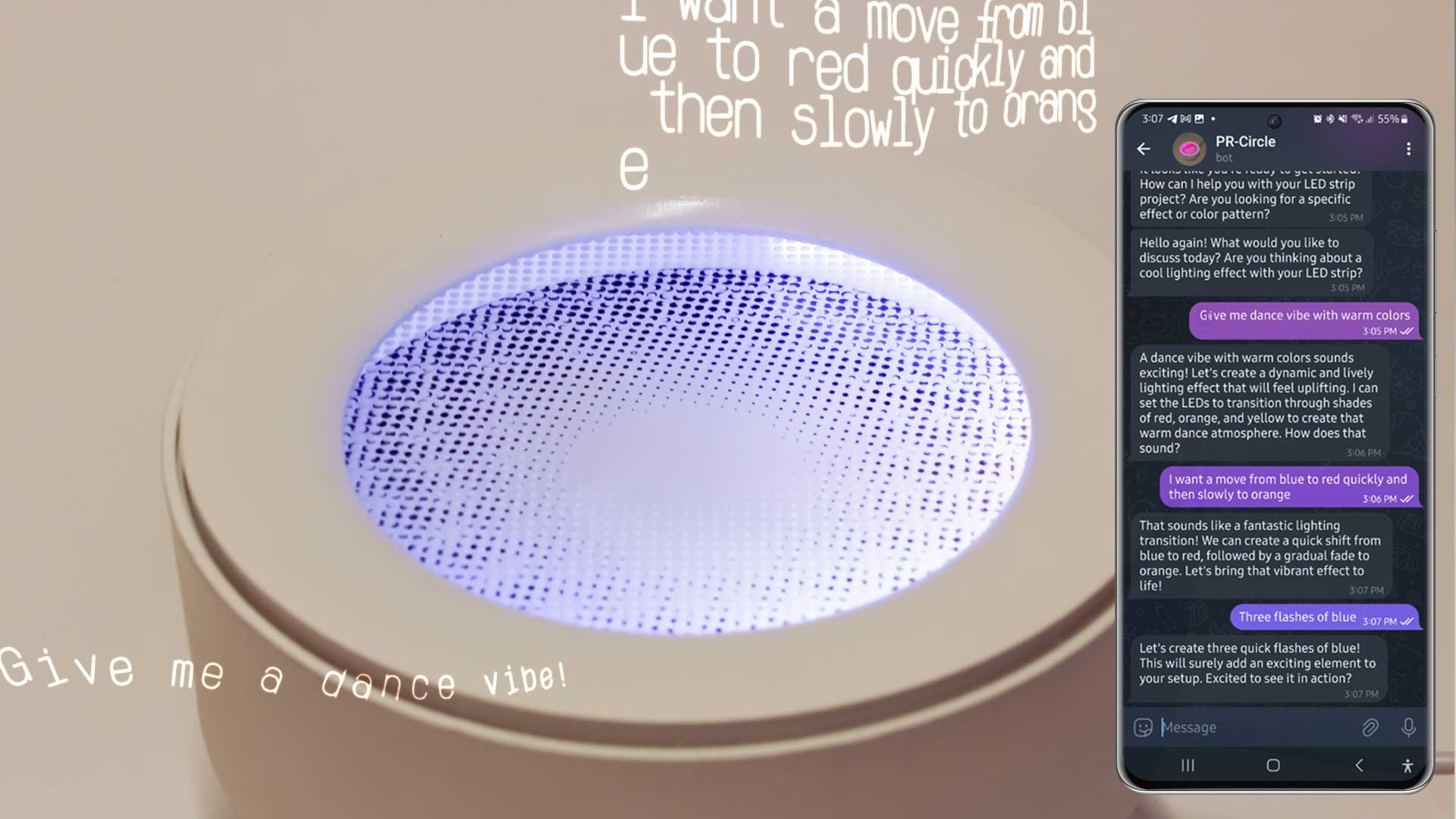

The Windmill Ecosystem Prototype operationalizes the concept of Prompting Realities by demonstrating how natural language interaction can orchestrate a network of physical and virtual artifacts. The system connects a large language model (LLM) interface to a set of three tangible windmills—each representing a distinct behavioral agent—through structured MQTT communication. When users issue prompts, the assistant interprets them into machine-readable commands defining direction, speed, and timing parameters, which are then transmitted to control each motorized windmill in real time. This loop—prompt → structured response → actuation—embodies how linguistic input can fluidly modulate physical expression.
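
To make the prompt → structured response → actuation loop more concrete, here is a minimal Python sketch of the middle step. It validates a structured reply from the assistant and turns it into one MQTT message per windmill. The JSON schema, the field names (`windmill`, `direction`, `speed`, `duration_s`), the value ranges, and the `windmills/<id>/command` topic layout are hypothetical assumptions for illustration, not the prototype's actual message format. The network publish is left out so the sketch stays within the standard library.

```python
import json
from dataclasses import dataclass

# Hypothetical message format: field names, allowed values, and topic layout
# are illustrative assumptions, not the prototype's real schema.
ALLOWED_DIRECTIONS = {"cw", "ccw", "stop"}
WINDMILL_IDS = {1, 2, 3}  # three windmills, one behavioral agent each


@dataclass(frozen=True)
class WindmillCommand:
    windmill: int      # which windmill to drive (1-3)
    direction: str     # "cw", "ccw", or "stop"
    speed: float       # normalized motor speed, 0.0 to 1.0
    duration_s: float  # how long to hold this behavior, in seconds


def parse_response(text: str) -> list[WindmillCommand]:
    """Turn the assistant's JSON reply into validated commands."""
    data = json.loads(text)
    commands = []
    for item in data["commands"]:
        cmd = WindmillCommand(
            windmill=int(item["windmill"]),
            direction=str(item["direction"]),
            speed=float(item["speed"]),
            duration_s=float(item["duration_s"]),
        )
        # Reject anything the hardware should never receive.
        if cmd.windmill not in WINDMILL_IDS:
            raise ValueError(f"unknown windmill: {cmd.windmill}")
        if cmd.direction not in ALLOWED_DIRECTIONS:
            raise ValueError(f"bad direction: {cmd.direction!r}")
        if not 0.0 <= cmd.speed <= 1.0:
            raise ValueError(f"speed out of range: {cmd.speed}")
        if cmd.duration_s < 0:
            raise ValueError(f"negative duration: {cmd.duration_s}")
        commands.append(cmd)
    return commands


def to_mqtt_messages(commands: list[WindmillCommand]) -> list[tuple[str, str]]:
    """Map each command to a (topic, payload) pair, one topic per windmill."""
    return [
        (
            f"windmills/{c.windmill}/command",
            json.dumps({"direction": c.direction, "speed": c.speed, "duration_s": c.duration_s}),
        )
        for c in commands
    ]


if __name__ == "__main__":
    reply = (
        '{"commands": ['
        '{"windmill": 1, "direction": "cw", "speed": 0.8, "duration_s": 5},'
        '{"windmill": 3, "direction": "stop", "speed": 0, "duration_s": 0}]}'
    )
    for topic, payload in to_mqtt_messages(parse_response(reply)):
        print(topic, payload)
```

Each `(topic, payload)` pair could then be handed to an MQTT client, for instance paho-mqtt's `Client.publish(topic, payload)`, with the controller on each windmill subscribed to its own topic. Validating before publishing is one way to keep malformed or out-of-range model output from reaching the motors.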

Designed as both a research probe and technical pipeline, the windmill ecosystem reveals how prompting can serve as a meta-interface for situated, embodied computation. The prototype frames the physical ensemble as a dynamic conversational field where meaning, movement, and intention are co-constructed between human, model, and machine. By linking symbolic reasoning with continuous motion, it explores how LLMs may become material collaborators—agents that translate abstract linguistic concepts into tangible, aesthetic, and spatial outcomes.

Design Positionality

How can AI upgrade your relationship with your products?

The application of LLMs in everyday life has gained momentum since the ChatGPT breakthrough in November 2022. While much attention has focused on engineering prompts for specific textual results, little emphasis has been placed on extending such iterative prompt engineering techniques beyond the limitations of screens and into more tangible, physical realities. This shift can empower users by expanding the familiar concept of “end-user programming” to include any type of everyday artefact. Consequently, Prompting Realities envisions an experiential scenario in which AI distributes the authoritative role of engineers and designers as creators to end-users, opening up new opportunities to contest, interrupt, resist, or manipulate everyday products in unfair situations: not through a system, but at the edge of usage.

A Tangible Glimpse of Future Everyday Interactions

The underlying pipeline follows a simple yet powerful approach: giving the large language model a precise description of the prototype (its functionality, appearance, and so on). This enables the model to understand how software variables correspond to effects in the physical world, in relation to the prototype’s functionality. Little emphasis is placed on the specific hardware or software used in the current prototypes, as the project focuses primarily on offering users a new experience rather than introducing a new AI-powered system. Nevertheless, the prototypes use a range of technologies, including the OpenAI Assistant API, Telegram Bot Interface, and TU Delft IDE Connected Interaction Kit, all of which can be replaced by similar alternatives.
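
Describing a prototype to the model can be sketched as a small data structure that pairs each software variable with its physical effect and permitted values, rendered as plain-text instructions. This is a minimal Python sketch under assumed names: `Variable`, `PrototypeDescription`, `build_instructions`, the example windmill, and the wording of the generated text are all hypothetical, not the project's actual prompt.

```python
from dataclasses import dataclass, field

# Hypothetical structure for describing a prototype to an LLM; class names,
# fields, and instruction wording are illustrative assumptions.


@dataclass
class Variable:
    name: str     # identifier the model should use in its replies
    effect: str   # what changing this variable does in the physical world
    allowed: str  # permitted values or range, stated in plain words


@dataclass
class PrototypeDescription:
    name: str
    appearance: str
    functionality: str
    variables: list[Variable] = field(default_factory=list)


def build_instructions(p: PrototypeDescription) -> str:
    """Render the description as plain text the model reads before any user prompt."""
    lines = [
        f"You control a physical prototype called {p.name}.",
        f"Appearance: {p.appearance}",
        f"Functionality: {p.functionality}",
        "Each variable below changes the prototype as follows:",
    ]
    for v in p.variables:
        # One line per variable links the software name to its physical effect.
        lines.append(f"- {v.name}: {v.effect} Allowed values: {v.allowed}.")
    lines.append("Reply only with JSON that sets these variables; do not invent new ones.")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical example values for one windmill.
    windmill = PrototypeDescription(
        name="Windmill 1",
        appearance="A small windmill whose rotor is driven by a motor.",
        functionality="Its rotation expresses one behavioral agent's mood.",
        variables=[
            Variable("direction", "Sets which way the rotor turns.", "cw, ccw, or stop"),
            Variable("speed", "Sets how fast the rotor turns.", "0.0 to 1.0"),
        ],
    )
    print(build_instructions(windmill))
```

The resulting string could be supplied as the instructions of an OpenAI assistant or as the system prompt of a comparable LLM interface, consistent with the point that the specific tools are interchangeable.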

“AI can distribute the authoritative role of creators to end-users.”

Redistributing Agency: AI's Role in Democratizing Design

Prompting Realities explores the intersection of AI and physical computing, extending the application of large language models beyond text-based interactions into tangible, real-world contexts. By enabling users to interact with AI-driven prototypes through conversational interfaces, the project pushes beyond classic notions of end-user programming, redistributing control and agency from engineers and designers to everyday users. This shift has the potential to democratize technology, enabling resistance, interruption, and subversion, and opening new avenues for human-AI collaboration. Through experiential AI prototyping, the project provokes speculative reflection on AI’s role in shaping our physical and digital environments, encouraging deeper engagement with the evolving relations between humans and machines.

A future with fewer AI-powered products but more AI-powered users is far preferable.

Publications

Mahan Mehrvarz, Dave Murray-Rust, and Himanshu Verma. 2025. Prompting Realities: Exploring the Potentials of Prompting for Tangible Artifacts. In Proceedings of the 16th Biannual Conference of the Italian SIGCHI Chapter (CHItaly ’25). Association for Computing Machinery, New York, NY, USA, Article 57, 1–6. https://doi.org/10.1145/3750069.3750089

Mahan Mehrvarz. 2025. Prompting Realities: Reappropriating Tangible Artifacts Through Conversation. interactions 32, 4 (July – August 2025), 10–11. https://doi.org/10.1145/3742782